Are you looking for an efficient and user-friendly way to manage your Azure resources? Azure Cloud Shell presents a powerful solution for interacting with Azure through a web browser. It allows developers and system administrators to work seamlessly in Azure environments without needing to rely on heavy graphical interfaces or complex local setups. If you’ve already ventured into Microsoft Azure and utilized various services like virtual machines (VMs) and cloud applications, you might be familiar with the Azure portal. However, managing Azure resources through the portal’s graphical interface can often be cumbersome and less intuitive. This is where Azure Cloud Shell shines, offering an easy and flexible method to manage your Azure resources with just a web browser.

Are you tired of navigating through the complex and ever-changing Azure portal? You’re not alone. As new updates and features are continuously rolled out, the user interface can become overwhelming, making it difficult to find what you’re looking for. Azure Cloud Shell offers a streamlined solution by enabling you to manage Azure resources directly through the command line, using either PowerShell or Bash. Let’s dive deeper into Azure Cloud Shell and explore how it works, its features, and why it’s an invaluable tool for Azure users.

Understanding Azure Cloud Shell: A Powerful Tool for Managing Azure Resources

Azure Cloud Shell is a web-based command-line interface that provides users with an intuitive environment to manage and interact with Microsoft Azure resources. This tool eliminates the need for complex local setups or installations, as it allows you to work directly from your browser. Whether you’re managing infrastructure, deploying applications, or automating tasks, Azure Cloud Shell offers a seamless and flexible solution to perform a wide range of tasks in the Azure ecosystem.

At its core, Azure Cloud Shell is a cloud-based shell environment that supports both PowerShell and Bash. This flexibility ensures that you can choose the command-line environment that best fits your preferences or work requirements. Both PowerShell and Bash are popular scripting environments, with PowerShell being favored by Windows-based administrators and Bash being widely used by Linux users. Azure Cloud Shell allows users to switch between these environments with ease, offering a consistent experience across different platforms.

One of the standout features of Azure Cloud Shell is its ability to operate entirely in the cloud, which means you no longer need to worry about the complexities of installing and configuring command-line tools locally. Azure Cloud Shell is pre-configured with all the necessary tools and dependencies, so you can jump straight into managing your Azure resources without worrying about maintaining the environment or dealing with updates.

Key Features of Azure Cloud Shell

1. No Local Setup Required

Azure Cloud Shell removes the need for any local software installation, making it incredibly user-friendly. Whether you’re using PowerShell or Bash, everything you need to interact with Azure is already available in the cloud. This is particularly beneficial for users who may be working in environments with limited access to install software or for those who want to avoid the hassle of managing dependencies and updates.

2. Pre-configured Tools and Environments

Azure Cloud Shell comes with a suite of pre-configured tools that make it easier to manage your Azure resources. Tools such as Azure PowerShell, Azure CLI, Git, Kubernetes kubectl, and Docker are all integrated into the Cloud Shell environment. These tools are kept up-to-date automatically, meaning you don’t have to worry about installing new versions or dealing with compatibility issues.

By providing these pre-installed tools, Azure Cloud Shell simplifies the process of managing Azure resources. You can quickly execute commands to configure virtual machines, manage storage, deploy containers, or automate workflows. The environment is designed to minimize setup time, enabling you to focus on the tasks that matter most.

3. Persistent Storage

While Azure Cloud Shell is designed to be a temporary environment, it also offers a persistent storage feature. This means you can save files, scripts, and other resources that you work with directly in the cloud. By default, Cloud Shell sets aside 5 GB of persistent storage for your home directory in an Azure file share. The shell itself is free to use, but the file share is billed at regular Azure Files rates.

When you work in Azure Cloud Shell, your session is automatically linked to an Azure file share, which enables you to save and retrieve files at any time. This persistent storage ensures that any work you do within Cloud Shell is not lost, even if your browser session is closed.
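As a rough illustration of saving work between sessions, the sketch below writes a small script into a persistent directory and runs it. It assumes the file share is exposed at `$HOME/clouddrive`, which is where Cloud Shell normally mounts it; the `scripts` subfolder and the `hello.sh` file name are hypothetical, and the snippet falls back to a temporary directory when it is tried outside Cloud Shell.

```shell
# Use the Cloud Shell file share if it is mounted; otherwise fall back to a
# temporary directory so the sketch can be tried on any machine.
if [ -d "$HOME/clouddrive" ]; then
  PERSIST_DIR="$HOME/clouddrive/scripts"        # hypothetical subfolder
else
  PERSIST_DIR="${TMPDIR:-/tmp}/clouddrive-demo/scripts"
fi

mkdir -p "$PERSIST_DIR"

# Write a tiny script (hypothetical name) into the persistent location.
cat > "$PERSIST_DIR/hello.sh" <<'EOF'
#!/bin/sh
echo "saved in a previous session"
EOF

# In a later session the same path can simply be run again.
sh "$PERSIST_DIR/hello.sh"
```

Running the file through `sh` rather than executing it directly avoids depending on the execute permission, which a network-mounted share may not honor in the usual way.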

4. Access to Azure Resources

With Azure Cloud Shell, you can easily interact with all of your Azure resources directly from the command line. From creating and configuring virtual machines to managing storage accounts, networking, and databases, Cloud Shell gives you full control over your Azure environment. The shell integrates seamlessly with Azure services, making it a versatile and convenient tool for developers, administrators, and IT professionals.
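To make this concrete, here is a minimal, read-only sketch of the kind of inventory you might run first in a Bash session. The `inventory` function name is made up for illustration; the `az` subcommands are standard Azure CLI commands, and the function prints a hint instead of failing when the CLI is not available.

```shell
# Read-only look at the active subscription and a few resource types.
# Prints a hint instead when the Azure CLI is not on PATH.
inventory() {
  if ! command -v az >/dev/null 2>&1; then
    echo "az not found: run this inside Cloud Shell"
    return 0
  fi
  az account show --output table           # which subscription is active
  az group list --output table             # resource groups
  az vm list --output table                # virtual machines
  az storage account list --output table   # storage accounts
}

inventory
```

Nothing here creates or changes resources, so it is a low-risk way to confirm that the session is pointed at the subscription you expect.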

5. Cross-Platform Compatibility

Azure Cloud Shell works directly in the browser, meaning you don’t need to worry about operating system compatibility. Whether you’re using Windows, macOS, or Linux, you can access and use Azure Cloud Shell from any device with an internet connection. This cross-platform compatibility ensures that you can work seamlessly from multiple devices and environments.

Additionally, because everything runs in the cloud, you can access your Cloud Shell environment from anywhere, making it ideal for remote work or accessing your Azure environment while traveling. All you need is a browser and an internet connection.

Benefits of Using Azure Cloud Shell

1. Simplified Azure Resource Management

Azure Cloud Shell provides a streamlined way to manage Azure resources through the command line. Instead of manually configuring and managing individual tools and services, Cloud Shell gives you access to a fully integrated environment that simplifies many of the common administrative tasks. From managing Azure Active Directory to creating and managing virtual networks, you can accomplish complex tasks with just a few commands.

Moreover, Cloud Shell enables you to automate repetitive tasks using scripts, which saves you time and reduces the chances of human error. Azure Cloud Shell is particularly useful for system administrators and DevOps engineers who frequently need to interact with Azure resources in an efficient and automated way.

2. Security and Access Control

Since Azure Cloud Shell operates within your Azure environment, it benefits from the security features and access controls already set up within your Azure subscription. All Cloud Shell sessions are tied to your Azure account, so you can leverage Azure Active Directory (AAD, now called Microsoft Entra ID) authentication and role-based access control (RBAC) to restrict access to certain resources.

Furthermore, changes you make to Azure resources from Cloud Shell are recorded in the Azure activity log like any other management operation, giving you an audit trail of actions taken within your Azure environment. This logging and security integration make Azure Cloud Shell a safe and compliant option for managing Azure resources.

3. Free and Scalable

The Cloud Shell compute environment itself is free. The only charge is for the Azure file share that backs your persistent storage, which by default provides 5 GB for your home directory, more than enough for most users to store their scripts, configuration files, and other resources. If you need more space, you can link your Cloud Shell to a different Azure file share.

Additionally, because it’s hosted in the cloud, Azure Cloud Shell scales automatically based on your needs. Whether you’re running a few simple commands or managing complex workloads, Cloud Shell provides a flexible environment that adapts to your specific requirements.

4. Support for Automation and Scripting

For users involved in automation and scripting, Azure Cloud Shell is an indispensable tool. With support for both PowerShell and Bash, Cloud Shell allows you to write and execute scripts that automate routine tasks, such as provisioning virtual machines, configuring networks, and deploying applications. You can save these scripts in the persistent storage to reuse them later, making it easy to replicate configurations and setups across different environments.
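A provisioning script of this kind might look like the sketch below. It is only an outline under stated assumptions: the resource group name, VM name, and region are hypothetical defaults, the `Ubuntu2204` image alias depends on the Azure CLI version in use, and the `run` and `provision` helpers are illustrative rather than part of any Azure tooling. By default the script only prints the commands it would run.

```shell
set -e

DRY_RUN="${DRY_RUN:-1}"          # 1 = only print commands; 0 = actually run them
RG_NAME="${RG_NAME:-demo-rg}"    # hypothetical resource group name
LOCATION="${LOCATION:-eastus}"   # any region your subscription allows
VM_NAME="${VM_NAME:-demo-vm}"    # hypothetical VM name

# Print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Create a resource group, then a Linux VM inside it.
provision() {
  run az group create --name "$RG_NAME" --location "$LOCATION"
  run az vm create \
    --resource-group "$RG_NAME" \
    --name "$VM_NAME" \
    --image Ubuntu2204 \
    --generate-ssh-keys
}

provision
```

Saving a script like this in persistent storage and invoking it with `DRY_RUN=0` in a later session repeats the same setup. Keeping dry-run as the default is a deliberate choice so that a copy-pasted script does not create billable resources by accident.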

How to Get Started with Azure Cloud Shell

Getting started with Azure Cloud Shell is straightforward. To use Azure Cloud Shell, simply navigate to the Azure portal and click on the Cloud Shell icon located at the top of the page. If it’s your first time using Cloud Shell, you’ll be prompted to choose between PowerShell and Bash. Once you’ve selected your environment, Cloud Shell will initialize and give you access to a full command-line interface with all the tools you need.

As soon as you access Cloud Shell, you can start executing commands and interacting with your Azure resources. You can even upload files to Cloud Shell, save your scripts, and perform more complex tasks, all from within your browser. Because Cloud Shell is tightly integrated with the Azure portal, you can easily switch between your Cloud Shell environment and the Azure portal as needed.

How to Access Azure Cloud Shell: A Complete Guide

Azure Cloud Shell is a powerful, browser-based tool that allows you to manage and interact with your Azure resources from anywhere. Whether you are a system administrator, a developer, or an IT professional, Cloud Shell provides an efficient command-line interface to perform Azure-related tasks. There are three primary methods to access Azure Cloud Shell, each offering a straightforward and user-friendly experience.

Accessing Azure Cloud Shell

1. Direct Access via Browser

Accessing Azure Cloud Shell is easy via your browser. To get started, visit the Azure Cloud Shell website. Once the page loads, you will be prompted to sign in using your Azure account credentials. After signing in, you can choose your preferred shell environment: PowerShell or Bash. After selecting a shell, you’re ready to begin managing your Azure resources through the command line.

2. Using the Azure Portal

Another convenient way to access Azure Cloud Shell is directly through the Azure portal. To do so, sign in to the Azure portal with your Azure account. Once signed in, look for the Cloud Shell icon in the toolbar at the top of the page, toward the right. The icon looks like a terminal prompt. When you click it, a new Cloud Shell session opens in a pane at the bottom of the portal page. From there, you have immediate access to your Azure resources using the shell interface.

3. Using Visual Studio Code

If you are a developer who uses Visual Studio Code, you can also integrate Azure Cloud Shell with this popular code editor. By installing the Azure Account extension in Visual Studio Code, you can open Cloud Shell sessions directly from within the editor. This feature allows developers to streamline their workflow by managing Azure resources while coding in a single interface, making the process more seamless and productive.

Key Features of Azure Cloud Shell

Azure Cloud Shell is equipped with a variety of features designed to improve the management of Azure resources and enhance your productivity. Let’s explore some of the key features that make Azure Cloud Shell a standout tool:

1. Persistent $HOME Across Sessions

One of the notable benefits of Azure Cloud Shell is that it provides persistent storage for your $HOME directory. Each time you use Cloud Shell, it automatically attaches an Azure file share. This means that your files and configurations are saved across different sessions, making it easier to pick up where you left off, even after logging out and back in. You don’t need to worry about losing important files, as they remain available every time you access the Cloud Shell environment.

2. Automatic and Secure Authentication

Azure Cloud Shell streamlines authentication. When you open Cloud Shell, it authenticates automatically with the account you used to sign in, so Azure CLI and Azure PowerShell commands work without a separate login step. This reduces the risk of exposing credentials in scripts or on local machines, and it also saves time, allowing you to focus on the tasks at hand rather than repeatedly entering login details.

3. Azure Drive (Azure:)

The Azure drive is a feature of the PowerShell experience in Azure Cloud Shell that makes managing Azure resources more intuitive. By using commands like cd Azure:, you can navigate your Azure resources, including virtual machines, storage accounts, networks, and other services, as if they were folders in a file system. This allows you to interact with your resources directly through the shell without needing to switch between different interfaces or consoles. The Azure drive is not available in Bash.

4. Integration with Open-Source Tools

Azure Cloud Shell integrates seamlessly with several popular open-source tools, including Terraform, Ansible, and Chef InSpec. These tools are often used by developers and IT administrators to manage infrastructure and automate workflows. With Cloud Shell’s native support for these tools, you can execute commands and manage your infrastructure within the same environment without having to set up external configurations or installations.

5. Access to Essential Tools

Azure Cloud Shell comes with a set of essential tools pre-installed, so you don’t have to worry about setting them up yourself. Key tools include:

- Azure CLI: The Azure Command-Line Interface is available in Cloud Shell to manage Azure resources.

- AzCopy: This command-line utility helps you copy data to and from Azure Storage.

- Kubernetes CLI (kubectl): You can use kubectl to manage Kubernetes clusters directly within Cloud Shell.

- Docker: Cloud Shell also includes Docker for container management.

- Text Editors: Whether you prefer vim or nano, you can use these text editors to edit scripts or configurations directly within Cloud Shell.

By having all these tools readily available, Azure Cloud Shell saves you time and effort, ensuring you can complete tasks without the need for additional installations.
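Because the exact tool set can change over time, a quick check of what is actually on the `PATH` can be useful. The sketch below is one way to do that; the `check_tool` helper is a made-up name, and the tool list simply mirrors the items mentioned above.

```shell
# Report whether a named tool is available on PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: found"
  else
    echo "$1: missing"
  fi
}

# Tools named in the list above; edit to match what you rely on.
for tool in az azcopy kubectl docker vim nano; do
  check_tool "$tool"
done
```

The same loop works on a local machine, which makes it easy to compare a workstation against the Cloud Shell environment before moving scripts between them.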

6. Interactive and User-Friendly Interface

Azure Cloud Shell has been designed with user experience in mind. The interface is intuitive, providing an accessible experience for both novice users and seasoned professionals. Features like command history and tab completion enhance productivity by making it easy to recall past commands and complete partial commands automatically, reducing errors and speeding up the workflow.

7. Pre-Configured Environment

Azure Cloud Shell stands out because it eliminates the need for manual configuration. The environment is fully pre-configured with everything you need to start managing your Azure resources. Whether it’s the shell environment itself, the Azure CLI, or a set of development tools, Cloud Shell is ready to use right out of the box. This convenience ensures that you can get to work immediately without spending time configuring and setting up the environment.

Benefits of Using Azure Cloud Shell

1. Accessibility Anywhere, Anytime

Azure Cloud Shell is a browser-based tool, which means you can access it from anywhere, as long as you have an internet connection. There’s no need to install or maintain local tools or worry about platform compatibility. You can securely access your Azure environment and perform tasks on the go, making it an ideal tool for IT administrators and developers who need flexibility in their workflows.

2. Time-Saving Pre-Configured Environment

One of the biggest advantages of Azure Cloud Shell is its pre-configured environment. This means that the typical setup time for local development environments is drastically reduced. Cloud Shell allows you to focus on managing resources and developing your projects, without worrying about the underlying infrastructure or software installation.

3. Secure and Efficient

The security and efficiency of Azure Cloud Shell are enhanced by its automatic authentication and persistent storage features. These capabilities reduce the risk of security breaches while ensuring that your work is saved and accessible whenever you need it. Additionally, since everything is integrated with Azure’s security framework, Cloud Shell automatically benefits from the protections built into Azure, such as identity and access management (IAM), multi-factor authentication (MFA), and data encryption.

4. Cost-Effective

Since Azure Cloud Shell is a fully managed service provided by Azure, you don’t need to worry about the costs associated with provisioning and maintaining infrastructure. You only pay for the storage used by the file share; the compute that hosts the shell is provided at no charge. This makes Cloud Shell a cost-effective solution for businesses of all sizes, allowing you to reduce overhead and focus your resources on more strategic tasks.

The Benefits of Using Azure Cloud Shell for Efficient Cloud Management

Azure Cloud Shell is a powerful, browser-based command-line interface that significantly enhances the way users manage their Azure resources. It offers a plethora of benefits for IT professionals, system administrators, and developers who need an efficient and streamlined way to interact with the Azure cloud environment. This tool eliminates the complexities associated with setting up and maintaining command-line environments, offering a straightforward, reliable way to perform critical tasks. Here are some of the primary advantages of using Azure Cloud Shell.

1. No Installation or Configuration Hassles

One of the most significant advantages of Azure Cloud Shell is that it requires no installation or configuration. Traditionally, using command-line interfaces like PowerShell or Bash involves installing software, configuring dependencies, and maintaining versions. However, Azure Cloud Shell eliminates these concerns by providing an environment where everything is pre-installed and configured. This means that you don’t have to worry about updates, dependency issues, or managing software installations. You can access and start using the tool immediately after logging in to your Azure portal, saving you valuable time and effort.

By abstracting away the need for local installations and configurations, Azure Cloud Shell makes the process of managing Azure resources simpler and more accessible for users at all levels. Whether you’re an experienced developer or a beginner, this feature enhances your overall experience by allowing you to focus on your tasks rather than setup.

2. Cross-Platform Compatibility

Azure Cloud Shell is designed to be fully compatible across a wide range of platforms. Since it operates entirely within your browser, it works seamlessly on different operating systems, including Windows, macOS, and Linux. Regardless of the operating system you’re using, you can access and interact with your Azure environment without any compatibility issues.

This cross-platform compatibility is particularly beneficial for teams that have diverse infrastructure environments. Developers and IT administrators can work on any system, whether they are on a Windows desktop or a macOS laptop, and still have full access to Azure Cloud Shell. It creates a unified experience across different devices and platforms, making it easier for users to switch between machines and continue their work.

3. Flexibility in Shell Environment Choices

Azure Cloud Shell provides users with the flexibility to choose between two different shell environments: PowerShell and Bash. This choice allows you to work in the environment that best suits your preferences or the requirements of the task at hand.

For instance, PowerShell is favored by many administrators in Windows-based environments due to its rich set of cmdlets and integrations. Bash, on the other hand, is popular among developers and users working in Linux-based environments or those who prefer a more traditional Unix-style command-line interface. Azure Cloud Shell supports both, giving you the freedom to use either PowerShell or Bash based on your needs.

This flexibility ensures that whether you are running Windows-based commands or interacting with Azure in a more Linux-centric manner, you have the ideal environment at your fingertips. This dual-environment support also helps bridge the gap between different development ecosystems, making it easier for teams to collaborate regardless of their platform preferences.

4. Seamless Integration with Azure Resources

Azure Cloud Shell integrates directly with Azure, making it incredibly easy to access and manage resources like virtual machines, storage accounts, networks, and other cloud services. The seamless integration means that you can run commands and scripts directly within the Azure environment without having to switch between different tools or interfaces.

Azure Cloud Shell also supports common Azure commands, which simplifies the process of interacting with your resources. You can execute tasks like provisioning infrastructure, managing access control, or configuring networking settings, all from the same interface. The integration with Azure’s native services ensures that you can manage your entire cloud infrastructure without needing to leave the Cloud Shell interface, improving productivity and streamlining workflows.

5. Cost-Effective Solution for Cloud Management

Azure Cloud Shell offers a cost-efficient approach to managing your cloud resources. Unlike traditional setups where you would need to invest in powerful hardware or virtual machines to run command-line tools, Cloud Shell operates in the cloud. This means that you only pay for the resources you consume, such as the Azure file share used to store your data and scripts.

With Azure Cloud Shell, there’s no need for heavy investments in local machines or servers to run your command-line tools. The service is optimized to run in a cloud environment, meaning you get all the power of a full-fledged command-line interface without the overhead costs. This pay-as-you-go model helps reduce unnecessary expenses, making Azure Cloud Shell a smart choice for businesses looking to manage their cloud resources in a cost-effective manner.

Additionally, the tool’s automatic management and upkeep of resources mean that businesses can avoid the operational costs associated with maintaining local software and infrastructure, contributing to overall cost savings in the long term.

6. Accessibility from Anywhere

Since Azure Cloud Shell is entirely cloud-based, you can access it from virtually anywhere, as long as you have an internet connection. This makes it a highly convenient tool for teams that need to work remotely or access their Azure resources while on the go. You don’t need to worry about being tied to a specific device or location, as Cloud Shell is accessible through any modern browser.

This accessibility is particularly beneficial for distributed teams or individuals who need to manage resources while traveling. Whether you’re in the office, at home, or on a business trip, you can access your Azure environment and continue your work uninterrupted. Azure Cloud Shell’s cloud-based nature ensures that your resources are always within reach, helping you stay productive regardless of your physical location.

7. Rich Support for DevOps and Automation Tools

Azure Cloud Shell is not just a basic command-line tool. It is equipped with a suite of features that make it well suited to DevOps workflows and automation tasks. The environment includes pre-installed tools such as the Azure Functions CLI, Terraform, the Kubernetes CLI (kubectl), Ansible, and Docker, which are all designed to facilitate the development, deployment, and management of cloud applications.

For developers and DevOps professionals, these tools provide the ability to automate routine tasks, manage containerized applications, and interact with infrastructure as code. With the integrated Azure Cloud Shell, you can automate deployments, manage infrastructure changes, and deploy applications with ease, making it a go-to tool for modern cloud-based development practices.

This deep support for automation tools enables you to integrate Cloud Shell into your DevOps pipeline, streamlining workflows and improving collaboration between development and operations teams. Whether you are working with infrastructure as code, orchestrating containers, or automating resource provisioning, Azure Cloud Shell provides the tools you need to execute these tasks efficiently.

8. Easy Access to Cloud Resources and Quick Setup

Using Azure Cloud Shell simplifies the process of setting up and managing cloud resources. There’s no need for manual configurations or complex setup procedures. The environment is pre-configured, meaning users can jump straight into managing their resources without spending time setting up the system or installing additional software.

Moreover, Azure Cloud Shell is tightly integrated with the Azure portal, which provides easy access to all of your cloud resources and management features. The cloud shell’s integration with the portal ensures that you can quickly execute commands and scripts while also taking advantage of the Azure portal’s graphical user interface for any tasks that require visual management.

Introduction to Azure Cloud Shell

Azure Cloud Shell is a cloud-based solution provided by Microsoft that offers a flexible and cost-efficient way for users to manage their Azure resources directly from a web browser. Unlike a traditional on-premises management setup, it requires no upfront investment in hardware and no long-term commitment. Azure Cloud Shell provides an easy-to-use interface for administrators, developers, and IT professionals to interact with Azure services, perform administrative tasks, and manage cloud resources without the need to set up complex infrastructure.

One of the major benefits of Azure Cloud Shell is its pay-as-you-go pricing model, which ensures that users only incur costs for the resources they actively use. This pricing structure makes it an attractive option for both small-scale and enterprise-level operations. Additionally, Azure Cloud Shell provides integrated access to Azure Files, a managed file storage service, which helps users store data efficiently while taking advantage of cloud storage features like high durability and redundancy.

Understanding Pricing for Azure Cloud Shell

Azure Cloud Shell is structured to provide users with flexibility, allowing them to use only the resources they need, without any significant upfront costs. The service focuses primarily on the cost associated with storage transactions and the amount of data transferred between storage resources. Below, we’ll explore the main factors that influence the pricing of Azure Cloud Shell and its associated storage services.

No Upfront Costs

One of the key advantages of Azure Cloud Shell is the absence of upfront costs. There is no need to purchase or rent physical hardware, and users do not need to commit to long-term contracts. This means that you pay based on usage, making it easy to scale up or down as needed.

Primary Cost Components

The primary cost drivers for Azure Cloud Shell are storage transactions and data transfer. Azure Files, which is the file storage service used in conjunction with Cloud Shell, incurs charges based on the number of storage transactions you perform and the amount of data transferred. These charges are typically associated with actions like uploading and downloading files, as well as interacting with the file system.
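The cost components above can be combined into a back-of-the-envelope estimate. The sketch below is a simplified model, not an official calculator: it assumes a per-GiB monthly storage rate, a per-10,000-transactions rate, and a per-GiB data transfer rate, and every rate passed in is a made-up placeholder rather than a real Azure price. The `estimate_cost` name is hypothetical.

```shell
# Estimate a monthly file share bill from usage figures and caller-supplied rates.
# Arguments:
#   $1 stored GiB              $2 rate per GiB-month
#   $3 number of transactions  $4 rate per 10,000 transactions
#   $5 GiB transferred out     $6 rate per GiB transferred
# All rates are placeholders, NOT real Azure prices.
estimate_cost() {
  awk -v gib="$1" -v gib_rate="$2" \
      -v tx="$3" -v tx_rate="$4" \
      -v egress="$5" -v egress_rate="$6" \
      'BEGIN { printf "%.2f\n", gib * gib_rate + (tx / 10000) * tx_rate + egress * egress_rate }'
}

# Made-up inputs: 5 GiB stored, 20,000 transactions, 1 GiB transferred.
estimate_cost 5 0.06 20000 0.015 1 0.08   # prints 0.41
```

For real figures, substitute the current rates for your region and storage tier from the Azure pricing page; the structure of the calculation stays the same.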

Types of Storage Available

Azure Cloud Shell uses locally redundant storage (LRS), which is designed to keep your files durable and available. LRS keeps multiple copies of your data within a single datacenter in the same region, providing redundancy in case of hardware failure. The storage tiers available under Azure Files are designed to suit different use cases, and each tier has its own pricing structure:

- Premium Storage: Premium storage is ideal for I/O-intensive workloads that require low latency and high throughput. If your Azure Cloud Shell usage involves high-performance tasks, such as running complex applications or processing large datasets, the Premium storage tier is best suited to your needs. While this tier offers excellent performance, it comes at a higher cost compared to other options due to its superior speed and responsiveness.

- Transaction Optimized Storage: The Transaction Optimized tier is designed for workloads that involve frequent transactions but are not as sensitive to latency. This tier is suitable for applications where the volume of read and write operations is high, but the system doesn’t necessarily require immediate or real-time responses. This makes it an ideal choice for databases and other systems where transaction processing is the focus, but latency isn’t as critical.

- Hot Storage: The Hot Storage tier is a good fit for general-purpose file-sharing scenarios where the data is frequently accessed and updated. If your cloud shell usage includes regularly accessing and sharing files, this tier ensures that your files are quickly available. Hot storage is optimized for active data that needs to be accessed often, ensuring efficiency in performance.

- Cool Storage: For situations where data access is infrequent, the Cool Storage tier provides a more cost-effective solution for archiving and long-term storage. This tier is designed for data that does not need to be accessed frequently, such as backup files, logs, and historical data. While the access time may be slightly slower compared to the Hot tier, Cool storage is priced more affordably, making it a great option for archival purposes.

Key Features of Azure Cloud Shell

In addition to its flexible pricing structure, Azure Cloud Shell offers several features that enhance its usability and functionality:

- Integrated Environment: Azure Cloud Shell integrates both Azure PowerShell and Azure CLI in a single environment, allowing users to work with both interfaces seamlessly. This is particularly useful for those who prefer working in different command-line environments or need to execute scripts that utilize both tools.

- Pre-configured Tools: The environment comes pre-configured with a set of commonly used tools, including text editors, Git, Azure Resource Manager (ARM) templates, and Kubernetes command-line utilities. These tools are available out-of-the-box, saving users time and effort in setting up the environment.

- Persistent Storage: One of the key features of Azure Cloud Shell is the ability to persist data. While Cloud Shell itself is ephemeral, the Azure Files storage used to store data remains persistent. This means that any files you upload or create are available across sessions and can be accessed at any time.

- Scalability and Flexibility: Azure Cloud Shell is highly scalable, and users can work on a variety of cloud management tasks, ranging from basic resource configuration to complex application deployments. This scalability ensures that Cloud Shell is suitable for both small developers and large enterprises.

- Security: Azure Cloud Shell benefits from the robust security mechanisms provided by Azure. This includes data encryption, both in transit and at rest, ensuring that your data remains secure while interacting with Azure services.

Learning Azure Cloud Shell

Azure Cloud Shell is designed to be user-friendly, and Microsoft offers a range of resources to help both beginners and experienced professionals get up to speed quickly. Here are several ways you can learn to use Azure Cloud Shell effectively:

- Microsoft Tutorials and Documentation:

Microsoft provides comprehensive documentation for both Azure PowerShell and Azure CLI, detailing all the necessary commands and procedures to manage Azure resources. These tutorials cover everything from basic usage to advanced configurations, helping users master the platform at their own pace. - Hands-On Learning with Azure Cloud Shell Playground:

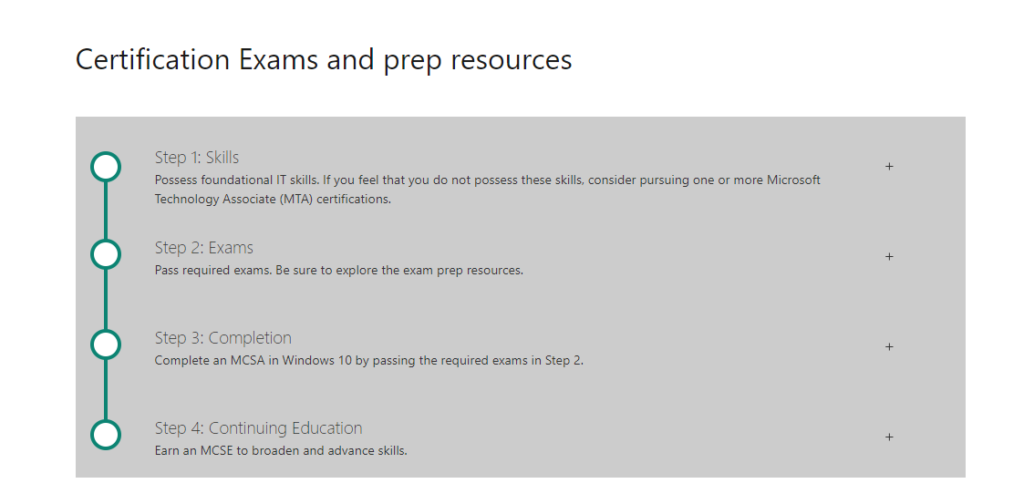

For those who prefer practical experience, the Azure Cloud Shell Playground offers an interactive learning environment. It allows users to practice managing Azure resources, executing commands, and exploring real-world use cases in a controlled, risk-free environment. - Online Courses and Certifications:

If you’re looking to dive deeper into Azure and become certified in Azure management, Microsoft offers various online courses and certifications. These courses cover a wide range of topics, from basic cloud management to advanced cloud architecture and DevOps strategies. Certifications such as the Microsoft Certified: Azure Fundamentals and Microsoft Certified: Azure Solutions Architect Expert are valuable credentials that demonstrate your proficiency with Azure. - Community and Support:

Azure Cloud Shell has an active community of users and experts who frequently share tips, best practices, and solutions to common problems. You can participate in online forums, discussion boards, or attend events like Microsoft Ignite to connect with other Azure enthusiasts.

Conclusion

Azure Cloud Shell stands out as a powerful, browser-based management tool that brings flexibility, accessibility, and ease of use to anyone working with Microsoft Azure. Whether you’re an experienced IT professional, a developer, or someone just beginning your cloud journey, Azure Cloud Shell simplifies the process of managing Azure resources by offering a pre-configured, on-demand command-line environment accessible from virtually anywhere.

One of the most compelling advantages of Azure Cloud Shell is its accessibility. Users can launch the shell directly from the Azure portal or from shell.azure.com, using nothing more than a browser. There is no need to install software or configure local environments, which reduces setup time and ensures consistent behavior across devices. This level of convenience makes it an ideal choice for cloud professionals who are on the move or working remotely.

In terms of capabilities, Azure Cloud Shell provides access to both Azure PowerShell and Azure CLI, the two main command-line interfaces for interacting with Azure services. This dual-environment support allows users to choose the tool that suits their workflow best or to alternate between them as needed. In addition, the environment comes equipped with popular development and management tools, such as Git, Terraform, Kubernetes tools, and various text editors. This toolset allows users to write, test, and deploy code directly from the shell environment.

Another critical feature of Azure Cloud Shell is its integration with Azure Files. When you first use Cloud Shell, you are prompted to create a file share in Azure Files (or attach an existing one) to store your scripts, configuration files, and other data. The share is mounted in your home directory as clouddrive, so your files are saved across sessions and accessible whenever you need them. It also enables more advanced workflows, such as storing automation scripts or using version control with Git directly within Cloud Shell.
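As a small illustration of working with this persistent storage, the sketch below saves a script under the mounted file share when it is available. It assumes the share appears as a `clouddrive` directory in the home directory, and it falls back to the home directory itself when that directory is missing (for example, when run outside Cloud Shell). The function names and the `scripts` subfolder are hypothetical choices for this example.

```python
from pathlib import Path


def persistent_dir(home=None):
    """Return the mounted file share if present, otherwise the home directory."""
    home = Path(home) if home is not None else Path.home()
    share = home / "clouddrive"  # assumed mount point of the Azure Files share
    return share if share.is_dir() else home


def save_script(name, body, home=None):
    """Write a script into a 'scripts' folder inside the persistent location."""
    target = persistent_dir(home) / "scripts"
    target.mkdir(parents=True, exist_ok=True)
    path = target / name
    path.write_text(body, encoding="utf-8")
    return path


if __name__ == "__main__":
    saved = save_script("list-groups.sh", "az group list --output table\n")
    print(f"Saved to {saved}")
```

Keeping reusable scripts in one known folder makes them easy to find in later sessions and easy to place under Git.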

From a cost perspective, Azure Cloud Shell is designed to be budget-friendly. There are no charges for using the shell itself, and the only costs incurred relate to the underlying storage and data transfer. Microsoft offers multiple storage tiers—including Premium, Transaction Optimized, Hot, and Cool—to meet varying performance and cost requirements. This approach enables users to tailor their cloud environment based on specific use cases, whether they require high-speed operations or long-term archiving.

When it comes to learning and support, Azure Cloud Shell is backed by Microsoft’s extensive documentation, tutorials, and online courses. Whether you’re looking to understand the basics of Azure CLI or dive deep into scripting with PowerShell, there are ample resources to guide your learning. Additionally, Microsoft provides hands-on labs through the Cloud Shell Playground, enabling users to gain practical experience in a safe, interactive environment.

In summary, Azure Cloud Shell represents a modern, efficient, and highly accessible way to manage Azure resources. It removes many of the traditional barriers to entry in cloud management by offering a seamless, browser-based interface, pre-loaded tools, and persistent cloud storage. Combined with flexible pricing and robust support resources, Azure Cloud Shell empowers users to control and automate their Azure environments with greater ease and confidence. Whether you’re managing simple workloads or orchestrating complex cloud infrastructures, Azure Cloud Shell equips you with the tools and flexibility to succeed in today’s dynamic cloud landscape.